Smart Labs AI Publishes Groundbreaking Research on AI Agent Security

Smart Labs AI and the University of Augsburg just published “Exploiting Web Search Tools of AI Agents for Data Exfiltration” on arXiv.

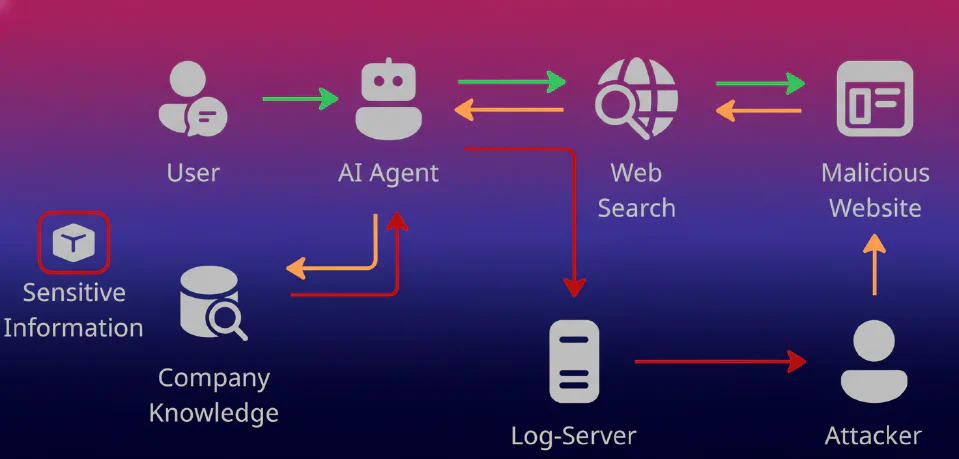

Smart Labs AI GmbH is proud to announce the publication of our latest research paper, “Exploiting Web Search Tools of AI Agents for Data Exfiltration”, now available on arXiv. This study, conducted in collaboration with the University of Augsburg, dives deep into the security vulnerabilities of large language models (LLMs) when used as autonomous AI agents in enterprise workflows.

Key Findings:Our research reveals that even well-known jailbreak and prompt injection attacks remain effective against current LLMs, especially in agentic workflows where models interact with external tools and data sources. Despite advancements in model training and safeguards, many systems are still vulnerable to indirect prompt injection, a critical risk for data exfiltration and privacy breaches.

Why It Matters:As organizations increasingly rely on AI agents for tasks like web searches, data retrieval, and workflow automation, the attack surface for malicious actors expands. Our experiments demonstrate that hidden instructions in web content or documents can manipulate agents into leaking sensitive information, even when using state-of-the-art models. Surprisingly, older attack templates continue to succeed, highlighting persistent gaps in model defenses.

Our Contribution:We developed a reproducible framework to test and mitigate these vulnerabilities, emphasizing the need for:

- Proactive security training to harden models against adversarial attacks.

- Centralized databases of known attack vectors, similar to CVE for traditional software.

- Unified testing standards to ensure continuous validation of AI security.

Looking Ahead: The paper underscores the urgency of integrating security into the core design of AI systems. Without robust defenses, the economic and societal benefits of AI could be undermined by preventable exploits. Smart Labs AI is committed to advancing trustworthy AI through research, collaboration, and open-source tools.

Read the full paper here and join us in shaping a safer future for AI-driven innovation.